In 2024, Google struck a licensing deal with Reddit, reportedly worth about $60 million a year, for access to Reddit’s content firehose: essentially an API pipeline into Reddit’s public posts.

Why this matters

Google is in the middle of an AI revolution that could upend its search engine business model and threaten its biggest earner, advertising, which brought in $264.59 billion in 2024. For Google, this could be a life-and-death struggle. And AI is a monster that feeds on vast amounts of data to train the large language models behind products like its Gemini chatbot. This is where Reddit comes in.

But people want information that is credible and trustworthy, and both AI slop and human slop are sowing doubt and misinformation online. More on that later.

So Google is caught between the past and the future. Search engines are the past: they still work and, for the moment, are still rivers of gold. The future is the new “AI answer engines” that are disrupting the last two decades of the web.

Google needs to protect search while leaning into AI. AI and its large language models need content, and Reddit provides human-created content.

All of this is fueling two kinds of slop: human slop (misinformation, fake news, conspiracy theories and much more, created on forums like Reddit and Quora and on social media) and AI slop (the byproduct of generative models churning out low-quality content at scale).

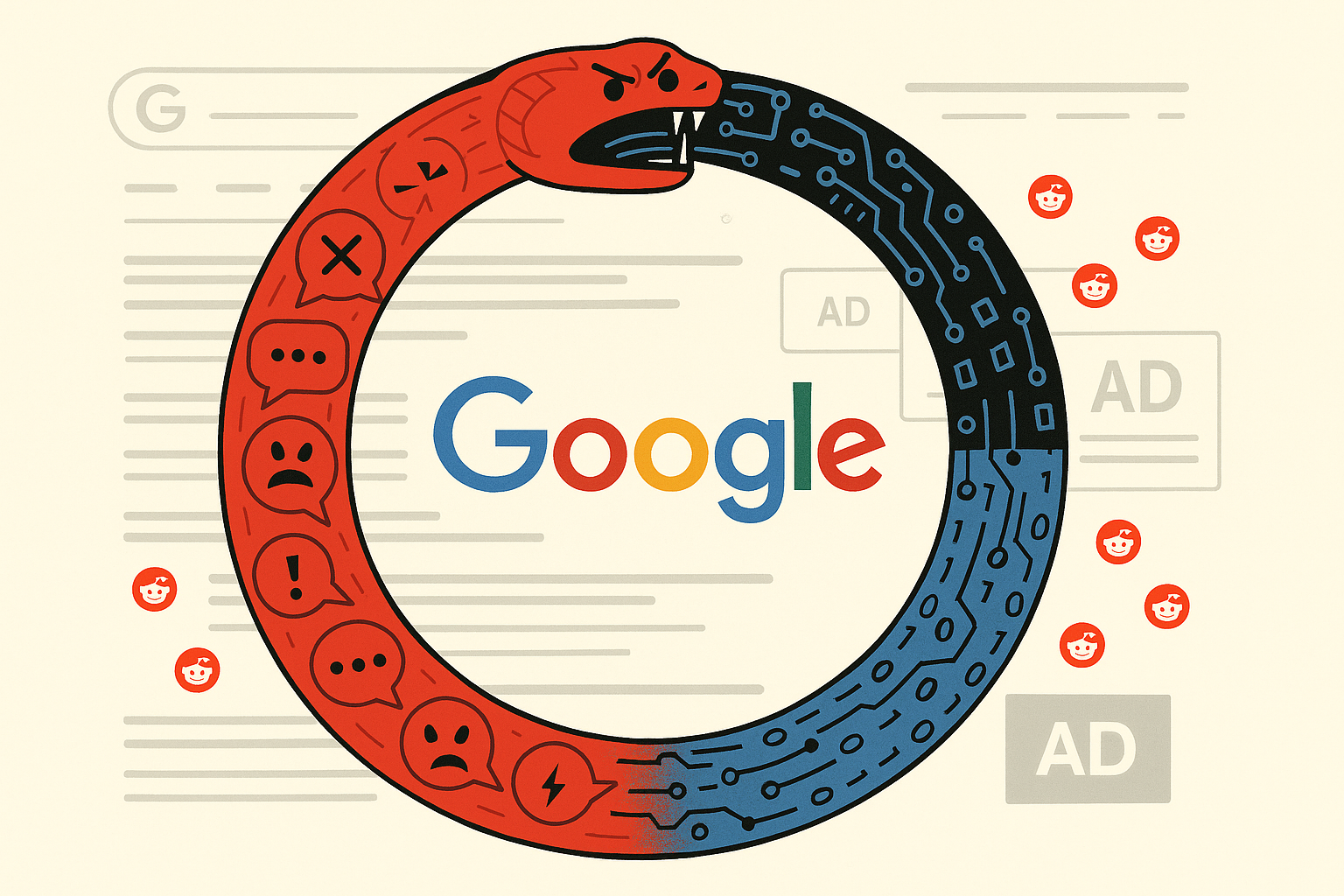

The two then feed off each other in a content race to the bottom, and the result is a blend of AI and human content slop.

So why is Google paying Reddit for access to its content?

Here are 3 reasons:

1) Google needs Human Signal Injection for AI training

Google wanted access to what it considers “real human discourse” (messy, emotional, contradictory, and unfiltered) to improve its LLMs, such as Gemini.

Reddit provides an abundance of real-world user opinions, advice, and questions, which can fill gaps in LLM training data, especially around niche questions, emerging trends, and long-tail queries.

2) It needs to deploy a defensive strategy against OpenAI and others

OpenAI and Microsoft had already struck content deals with publishers, so Google needed deals of its own. And it wanted data that didn’t come from mainstream media or encyclopedic sources.

And Reddit is a unique domain where search intent and conversation overlap. It’s a goldmine of “authentic” queries and human language patterns.

3) There is a search decline panic

Users increasingly tack “Reddit” onto their Google searches (e.g., “best camera for travel Reddit”). Google noticed that users trust forum-based content more than commercial SEO slop. So it bought access to juice its own LLMs, not just its search results.

Ironically, in trying to combat AI-generated slop, Google licensed one of the biggest contributors to human slop.

What is AI slop?

AI slop is the byproduct of generative models churning out low-quality content at scale. Think of the thousands of articles spun up daily that answer “What time is the Super Bowl?” or “How to boil an egg” with robotic blandness. It’s filler text optimized not for insight but for SEO clicks and ad impressions.

AI slop looks authoritative—formatted neatly, keyword-rich, SEO-tuned—but it’s hollow. It lacks lived experience, context, or critical judgment. It’s text generated to game algorithms, not to inform or inspire people.

The danger? When the web becomes dominated by slop, even the best AI models will only have slop to learn from. It’s the digital equivalent of feeding junk food to an athlete and wondering why performance declines.

What is Human slop?

Human slop has been around much longer. It’s the messy underbelly of forums, comment sections, and conspiracy-driven threads. It’s Reddit rants passed off as fact. It’s Quora answers confidently asserting nonsense. It’s Facebook groups where pseudoscience is treated as gospel.

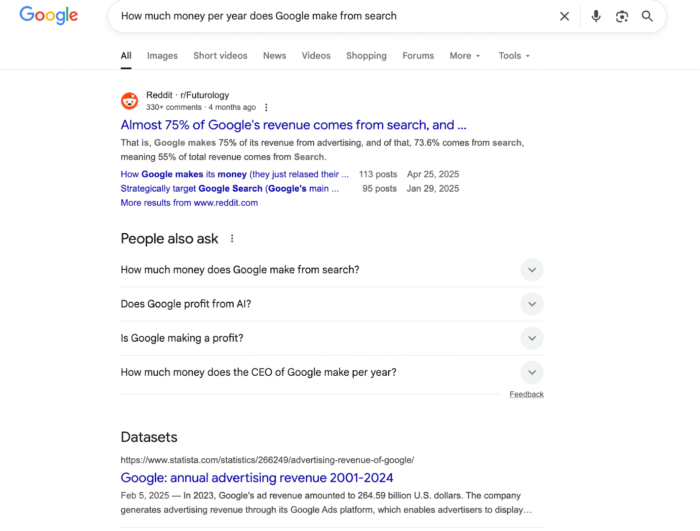

While researching this article, I saw Reddit ranked above a credible source such as Statista. I typed in the question “How much money per year does Google make from search?”

Reddit ranked #1, Statista #2, and Quora #3 in the global results. I would classify Statista as a very credible source, but I would rate Reddit and Quora as generally low in credibility and lacking research chops! I would put them both in the “human content slop” category: just as dangerous as getting your news from Facebook!

Unlike AI slop, which is algorithmic mimicry, human slop is driven by ego, ignorance, and incentives. People crave belonging, attention, and validation. And when platforms reward hot takes, half-truths, and emotional bait, we shouldn’t be surprised when credibility gets drowned out.

Human slop thrives in places where moderation is lax, verification is absent, and virality is rewarded.

And this includes social media and forums like Reddit and Quora.

Sound familiar?

Google’s slop economy

Here’s where things get truly twisted: Google, the company that once promised to “organize the world’s information,” now makes billions amplifying both AI and human slop.

1. Paying for human slop

Reports suggest Google inked deals worth tens of millions to pull Reddit data into its training pipelines. Why? Because Google sees Reddit as a goldmine of “human signals.” But let’s be honest: much of Reddit is speculation, myth, and bias—content written not by experts but by whoever shouted loudest in a thread.

By elevating Reddit as a source of “real human knowledge,” Google isn’t curating credibility. It’s laundering human slop through the prestige of its search results.

2. Feeding on AI slop

At the same time, Google profits from the SEO spam economy—websites littered with auto-generated content. Entire networks of publishers now exist solely to pump out AI-written pages that rank in search, load up with ads, and vanish into irrelevance.

Google pretends to fight this with updates targeting “low-quality content.” But the reality? The slop keeps ranking, because slop generates clicks, and clicks generate revenue.

3. Double-dipping on slop

This is the kicker: AI models are trained on human slop (forums, comments, low-grade blogs), while humans are fed AI slop in search results. The system is circular. And who sits in the middle of this feedback loop, collecting rent at every turn? Google.

The myth of credibility

Google has always sold itself as a curator of knowledge. PageRank, the original algorithm, was supposed to surface the best, most authoritative sources. But today, authority is a façade.

- A thread on Reddit with enough upvotes gets elevated to “credible human input.”

- A blog written entirely by AI can still rank if it hits the right SEO beats.

- News outlets churn out content-farm articles that are indistinguishable from AI spam, and Google rewards them with traffic.

The line between knowledge and noise has collapsed. And yet, the ads keep running.

Why this matters

We’re not just talking about a little extra spam. The slop problem has deep consequences:

- Training Pollution: Future AI models trained on today’s internet will ingest more garbage than gold. This accelerates the degradation of machine intelligence itself.

- Trust Erosion: People already distrust institutions. When Google serves up contradictory nonsense—Reddit threads masquerading as fact next to AI-written drivel—it deepens public cynicism.

- Knowledge Collapse: Once the web becomes mostly noise, the cost of finding truth skyrockets. This creates an advantage for closed systems (like paywalled research or private databases) while the public commons decays.

- Profit Over Public Good: Google has the resources to lead in building credibility systems. Instead, it doubles down on monetizing the very content that undermines trust.

The Google paradox

Here’s the paradox: Google needs to appear like it’s cleaning up the web while actually thriving on the mess.

- It punishes small creators with endless algorithm changes but quietly allows corporate content farms to flood results.

- It lectures about misinformation while funding the very platforms that spread it.

- It talks about AI safety while feeding AI models polluted data streams.

In short: Google doesn’t solve slop; it monetizes slop.

Historical echoes

We’ve been here before. Remember the yellow journalism era of the late 19th century? Newspapers realized sensationalism sold better than sober reporting. Headlines became louder, facts looser, and public discourse more chaotic.

Google’s current trajectory is a digital replay. The incentives aren’t aligned with truth, only with engagement, measured in metrics like “time on site.”

And just like then, society pays the price in confusion, polarization, and erosion of trust.

Why people don’t notice slop

Slop works because it doesn’t look like slop.

- AI slop is grammatically correct, neatly structured, and eerily competent at mimicking authority.

- Human slop feels authentic because it comes with anger, humor, or vulnerability: qualities machines struggle to fake convincingly.

Both bypass our defenses in different ways. Both get boosted by algorithms that optimize for time-on-page and click-through rate. And both keep us coming back for more.

The future of slop

If nothing changes, the internet becomes a slop swamp. Here’s a plausible trajectory:

- By 2027, according to some predictions, as much as 80% of web content could be AI-generated, much of it indistinguishable from spam.

- Forums and communities become increasingly polluted as humans mimic AI patterns and AI mimics humans.

- Search results become a carousel of slop feeding slop, with credibility evaporating as a public standard.

At that point, truth becomes a premium product, locked behind paywalls, private AI agents, or niche platforms with heavy moderation. The open web, once the Library of Alexandria of the digital age, risks becoming a landfill.

Google’s slop addiction

The uncomfortable truth is that Google is addicted to slop. It can’t afford to stop feeding on it because slop scales. It’s cheap to produce, easy to monetize, and endlessly renewable.

As long as advertisers pay for eyeballs, and as long as slop generates those eyeballs, Google will keep enabling it. Credibility is expensive. Slop is profitable. Guess which one wins in Silicon Valley boardrooms?

Final thoughts

We are heading fast towards a slop-centered web

We are standing at a strange moment in internet history. AI slop and human slop are converging, not diverging. The machines produce infinite volumes of plausible-sounding filler. The humans produce endless streams of biased, emotional noise. And Google stitches both together, sprinkles ads on top, and calls it “organizing the world’s information.”

It’s not organization. It’s monetized chaos.

The tragedy is not just that the web is noisier; it’s that the very company with the power to fix it has chosen to profit from it instead. And unless we wake up to the costs, the slop will only deepen until the web itself becomes something we no longer trust.